How to Scale Yoast Sitemaps for Large Sites

Note: This post was written in 2020

The Yoast SEO plugin is a great tool for quickly and easily managing many aspects of search engine optimisation on a WordPress site.

However, when using Yoast on large-scale publishing sites, you will likely run into some significant performance bottlenecks. The most common problem we have had to deal with is the automatic XML sitemap generation offered by the plugin.

Generating all of the required sitemaps for a site with 200,000+ posts is a tall order for any software, and often Yoast falls short at this scale. It tends to generate 5XX server timeouts and unnecessary database load.

It is worth noting that the WordPress core project is looking at solving this; however, it’s still some time off, and untested. Yoast are also working to improve the sitemap feature based on their new index feature introduced in version 14. In the meantime, the following will be useful in running Yoast sitemaps at scale.

What are the problems with Yoast Sitemaps?

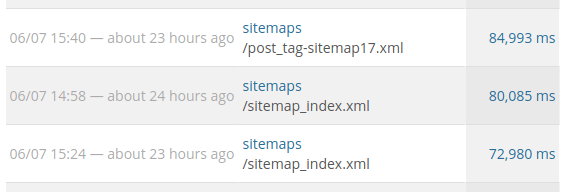

It is not uncommon to have the Yoast sitemaps performing like this:

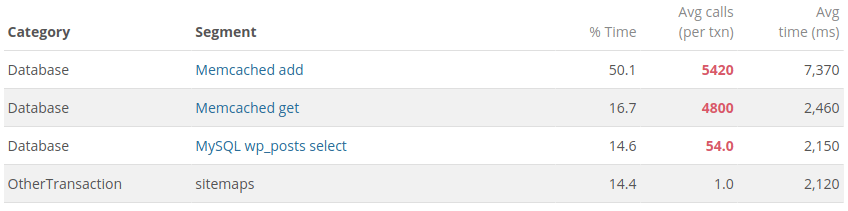

Digging into the stack trace of the failing sitemap calls, you can see a couple of problems:

1. There are a large number of SQL queries to pull all of the required information. This is not surprising as the sitemaps are basically a list of all posts on the site, and there’s really no way to avoid this.

2. The large number of queries generate a proportionately large number of object caching requests. We use Memcached as a data store for object caching on most large publishing sites as they live in a multiserver / load balanced environment. This means each of these requests then has some amount of network latency associated with it.

The network latency is only a few milliseconds for each request, but when Yoast/WP_Query is generating tens of thousands of requests, this adds up to several minutes for each sitemap load!
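To make the arithmetic concrete, here is a back-of-the-envelope sketch. The request count and per-request latency are assumed figures chosen for illustration, not measurements from a real site.

```shell
#!/bin/bash
# Illustrative numbers only; measure your own site before relying on them.
cache_requests=50000   # assumed object-cache round trips for one sitemap
latency_ms=3           # assumed network latency per round trip

total_ms=$(( cache_requests * latency_ms ))
total_s=$(( total_ms / 1000 ))

echo "${cache_requests} requests x ${latency_ms} ms = ${total_s} s of pure network wait"
```

With these assumed values the sitemap spends 150 seconds waiting on the network alone, before any database or PHP time is counted.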

Is there a solution to these Yoast sitemap challenges?

Unfortunately, there isn’t a single silver bullet solution to solve these problems. There are, however, a number of custom tools you can use to get the sitemaps under control.

1. Generate the sitemap index statically

The root/index sitemap is the gateway to the posts/tags/categories/news sitemaps of the site. So it is critical that it loads every time Google crawls your site.

To ensure this happens, here at the Code Company we have created a custom must-use plugin to generate the root sitemap offline via WP-CLI. Instead of the sitemap being generated on the fly when a bot hits it, the sitemap XML is already generated and ready to go.

We then configure a cron job to automatically generate these sitemaps on a regular interval:

*/10 * * * * cd /srv/www/oursite/current && wp yoast-sitemap-build-root --url="https://www.oursite.com" 2>/dev/null

Check out the code for our WordPress plugin to add this CLI function.
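One thing to watch with a ten-minute schedule is a slow build overlapping with the next run. The following is a minimal sketch of one way to guard against that, assuming a simple directory-based lock. The `run_exclusive` function name and the lock path are hypothetical and are not part of the plugin.

```shell
#!/bin/bash
# Hypothetical wrapper: skip this run if a previous one is still going.
# run_exclusive <lock_dir> <command> [args...]
run_exclusive() {
  local lock_dir="$1"
  shift

  # mkdir is atomic: it fails if the directory already exists.
  if ! mkdir "$lock_dir" 2>/dev/null; then
    echo "SKIPPED: previous run still holds $lock_dir" >&2
    return 1
  fi

  # Run the command, remember its exit code, and always release the lock.
  "$@"
  local status=$?
  rmdir "$lock_dir"
  return "$status"
}
```

In the cron entry you would then call something like `run_exclusive /tmp/yoast-sitemap-root.lock wp yoast-sitemap-build-root --url="https://www.oursite.com"` after sourcing the function. Note that a lock left behind by a killed process has to be removed by hand with this approach.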

2. Selectively disable object caching

The WP object cache is designed to cache queries to the database. However, when it comes to generating the sitemaps, it doesn’t help at all. In fact, it is actually a hindrance and causes significant network delays.

Unfortunately, there is no way within WordPress to disable this just for sitemap queries. To get around this, we have set up custom logic to automatically disable object caching entirely when on a sitemap page. This will mean any queries Yoast makes will query the database directly.

This is our custom object-cache.php to implement this logic:

<?php

/**

* Plugin Name: Object Cache

* Description: Object cache backend - uses APC for local development, Redis or Memcached for prod/staging.

* Version: 3.0

* Author: The Code Company

* Author URI: http://thecode.co

*

* @package thecodeco

*/

/**

* Whether the object cache should be enabled.

*

* @var boolean

*/

$object_cache_enabled = true;

// Determine if we are on a URL which has the object cache disabled.

if ( defined( 'OBJECT_CACHE_BLACKLIST_URLS' ) ) {

// phpcs:disable

$request_uri = isset( $_SERVER['REQUEST_URI'] ) ? $_SERVER['REQUEST_URI'] : '';

// Match against the path only, so a query string cannot defeat the anchored regexes.

$request_path = (string) parse_url( $request_uri, \PHP_URL_PATH );

// phpcs:enable

foreach ( OBJECT_CACHE_BLACKLIST_URLS as $blacklist_regex ) {

if ( preg_match( $blacklist_regex, $request_path ) ) {

$object_cache_enabled = false;

break;

}

}

}

// Set object cache disabled via constant.

if ( defined( 'OBJECT_CACHE_DISABLE' ) && OBJECT_CACHE_DISABLE ) {

$object_cache_enabled = false;

}

// Load the appropriate object cache.

if ( $object_cache_enabled ) {

if ( defined( 'OBJECT_CACHE_USE_MEMCACHE' ) && OBJECT_CACHE_USE_MEMCACHE ) {

header( 'X-ObjectCache: memcached' );

require dirname( __FILE__ ) . '/memcached-object-cache.php';

} else {

header( 'X-ObjectCache: apc' );

require dirname( __FILE__ ) . '/apc-object-cache.php';

}

} else {

header( 'X-ObjectCache: disabled' );

}

We then define OBJECT_CACHE_BLACKLIST_URLS in our wp-config.php, like so:

define(

'OBJECT_CACHE_BLACKLIST_URLS',

array(

'/^\/sitemap_index\.xml$/', // Root Yoast sitemap

'/^\/(post_tag|post)-sitemap(\d+)?\.xml/', // Other sitemaps

)

);

These regexes will match just the problematic sitemap URLs.
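To check which request paths the blacklist would catch, you can try the patterns against a few sample paths. This is a rough sketch using Bash's `[[ =~ ]]` operator. The patterns are ERE versions of the two PCRE regexes, with `\d` written as `[0-9]` and literal dots escaped. The `is_blacklisted` function and the sample paths are made up for illustration.

```shell
#!/bin/bash
# ERE versions of the two blacklist patterns (Bash has no \d shorthand).
patterns=(
  '^/sitemap_index\.xml$'
  '^/(post_tag|post)-sitemap([0-9]+)?\.xml'
)

# Succeeds if the given request path matches any of the patterns.
is_blacklisted() {
  local path="$1" pattern
  for pattern in "${patterns[@]}"; do
    if [[ "$path" =~ $pattern ]]; then
      return 0
    fi
  done
  return 1
}

# Sample paths, chosen for illustration.
for path in /sitemap_index.xml /post-sitemap.xml /post_tag-sitemap12.xml \
            /category-sitemap.xml /2020/05/some-post/; do
  if is_blacklisted "$path"; then
    echo "object cache OFF: $path"
  else
    echo "object cache ON:  $path"
  fi
done
```

The first three paths are caught and the last two are not, so only the root, post and tag sitemaps bypass the object cache. If other sitemap types cause trouble on your site, they need their own entries in the list.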

3. Leverage CDN edge caching

Now that the sitemaps are actually loading without timeouts, you can start caching the output. Most of the sites we manage use Cloudflare as a proxy/CDN/WAF, so that is the best place to have this cached.

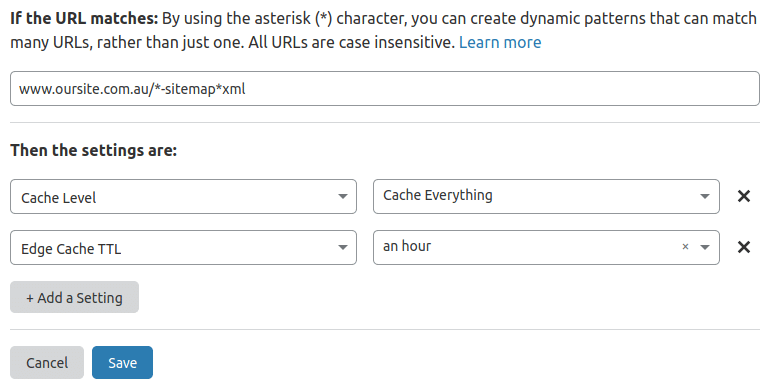

To do this, we set a Cloudflare page rule to specify an edge-caching TTL of an hour for most sitemaps, as they don’t change too often. It is worth noting that some sitemaps, such as the news-sitemap.xml, are meant to be more time-sensitive, so they should have a TTL of five minutes or less.

You could do this with other proxies as well; Cloudflare is just the one we typically use.

4. Pre-caching the Sitemap URLs

All of the post / taxonomy sitemaps will still be generated dynamically when they are hit. They load much faster now with all of our optimisations; however, you can still do better. We can pre-cache all of the sitemaps, so they are ready for Google to digest.

We do this with a smart Bash script that reads the root sitemap and hits each of the child sitemaps until they are cached at the edge.

#!/bin/bash

# Automatically hits all sitemaps on a site to pre-cache them at the edge.

# CONFIG

SITEMAP_URL=https://www.oursite.com.au/sitemap_index.xml

# Pull the index sitemap and get a list of all of the individual sitemaps.

echo "PULLING $SITEMAP_URL"

sitemaps=`curl $SITEMAP_URL | awk -F '[<>]' '/loc/{print $3}'`

# Loop over each of the sitemap URLs and Curl it to warm up the cache.

for sitemap in $sitemaps; do

count=0

# Curl the sitemap URL until we confirm that it is cached by Cloudflare.

while :; do

let "count=count+1"

echo "HITTING $sitemap"

result=`curl -sI $sitemap | tr -d '\r' | tr '[:upper:]' '[:lower:]' | sed -En 's/^cf-cache-status: (.*)/\1/p'`

# If URL is cached, move onto the next sitemap.

if [ "hit" == "$result" ]; then

echo "CACHED $sitemap"

break

fi

# If we reached the limit, move on, don't get stuck in an infinite loop.

if [ $count -ge "10" ]; then

echo "REACHED LIMIT"

break

fi

done

done

We then run this on a cron job at a regular interval, like this:

*/30 * * * * bash /root/scripts/sitemap-precache/sitemap-precache.sh > ~/sitemap-precache.log 2> /dev/null &
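To see what the two parsing steps in the pre-cache script do, here is a small sketch that runs the same `awk` and `sed` pipelines against canned data instead of a live site. The sample sitemap index, the sample response headers and the two function names are invented for illustration.

```shell
#!/bin/bash
# Sample data only: a trimmed sitemap index and a trimmed HTTP response.
sample_index='<?xml version="1.0" encoding="UTF-8"?>
<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap>
    <loc>https://www.example.com/post-sitemap1.xml</loc>
    <lastmod>2020-05-01T00:00:00+00:00</lastmod>
  </sitemap>
  <sitemap>
    <loc>https://www.example.com/post_tag-sitemap.xml</loc>
  </sitemap>
</sitemapindex>'

sample_headers=$'HTTP/2 200\r\nContent-Type: text/xml\r\nCF-Cache-Status: HIT\r\n\r\n'

# Same awk call as the script: split on < and >, print the text inside <loc>.
extract_locs() {
  awk -F '[<>]' '/loc/{print $3}'
}

# Same pipeline as the script: strip CRs, lowercase, pull out the header value.
cache_status() {
  tr -d '\r' | tr '[:upper:]' '[:lower:]' | sed -En 's/^cf-cache-status: (.*)/\1/p'
}

printf '%s\n' "$sample_index" | extract_locs
printf '%s' "$sample_headers" | cache_status
```

The first command prints the two child sitemap URLs, one per line, and the second prints `hit`. The `awk` approach relies on each `<loc>` element sitting on its own line. If your sitemap index is served as a single line of XML, it would need a different extraction step.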

There’s no silver bullet for making the Yoast sitemaps perform at scale on large publishing sites. However, by using a number of workarounds, improvements and adjustments you can make it work better.